TensorRT-Alpha

基于tensorrt+cuda c++实现模型end2end的gpu加速,支持win10、linux,在2023年已经更新模型:YOLOv8, YOLOv7, YOLOv6, YOLOv5, YOLOv4, YOLOv3, YOLOX, YOLOR,pphumanseg,u2net,EfficientDet。

Windows10教程正在制作,可以关注仓库:

https://github.com/FeiYull/TensorRT-Alpha

一、加速结果展示

1.1 性能速览

快速看看yolov8n 在移动端RTX2070m(8G)的新能表现:

modelvideo resolutionmodel input sizeGPU Memory-UsageGPU-Utilyolov8n1920x10808x3x640x6401093MiB/7982MiB14%

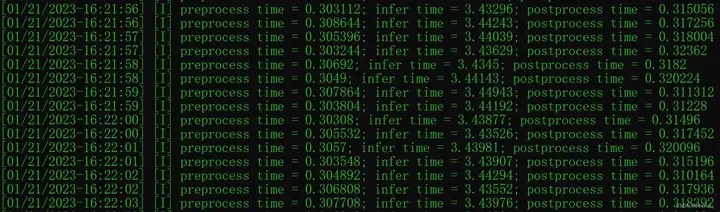

下图是yolov8n的运行时间开销,单位是ms:

yolov8n

更多TensorRT-Alpha测试录像在B站视频:

B站:YOLOv8n

B站:YOLOv8s

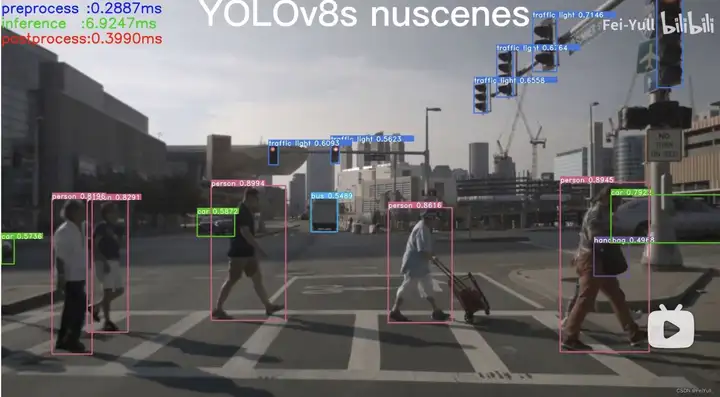

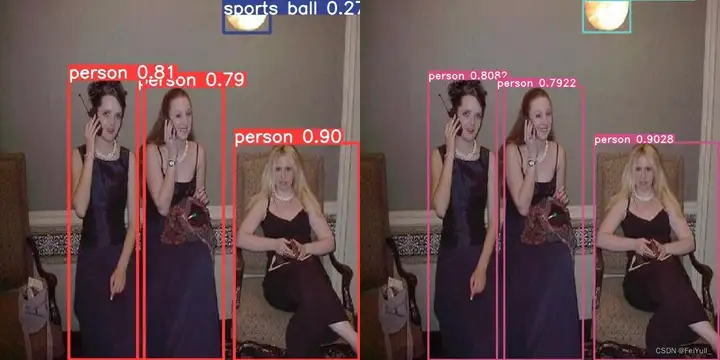

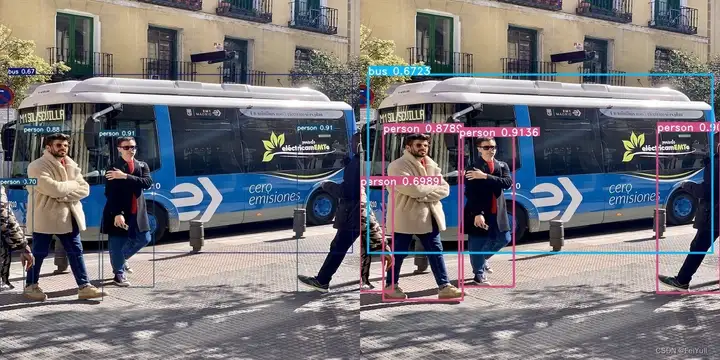

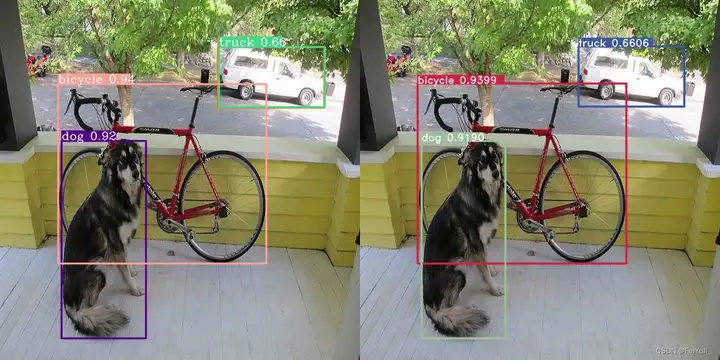

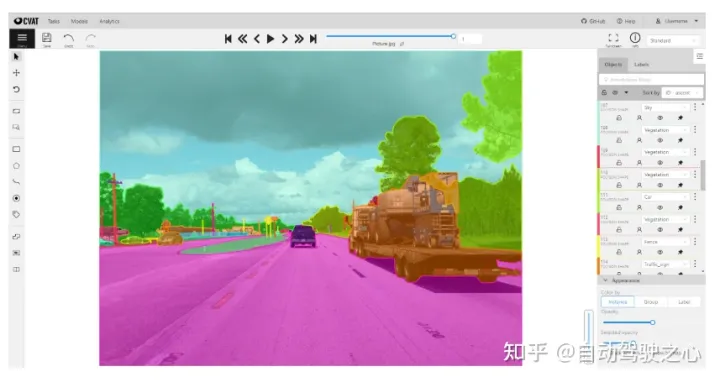

1.2精度对齐

下面是左边是python框架推理结果,右边是TensorRT-Alpha推理结果。

yolov8n : Offical( left ) vs Ours( right )

yolov7-tiny : Offical( left ) vs Ours( right )

yolov6s : Offical( left ) vs Ours( right )

yolov5s : Offical( left ) vs Ours( right )

YOLOv4 YOLOv3 YOLOR YOLOX略。

二、Ubuntu18.04环境配置

如果您对tensorrt不是很熟悉,请务必保持下面库版本一致。

2.1 安装工具链和opencv

sudo apt-get update

sudo apt-get install build-essential

sudo apt-get install git

sudo apt-get install gdb

sudo apt-get install cmake

sudo apt-get install libopencv-dev# pkg-config –modversion opencv

2.2 安装Nvidia相关库

注:Nvidia相关网站需要注册账号。

2.2.1 安装Nvidia显卡驱动

ubuntu-drivers devices

sudo add-apt-repository ppa:graphics-drivers/ppa

sudo apt update

sudo apt install nvidia-driver-470-server# for ubuntu18.04

nvidia-smi

2.2.2 安装 cuda11.3

进入链接:

https://developer.nvidia.com/cu

da-toolkit-archive

选择:CUDA Toolkit 11.3.0(April 2021)选择:[Linux] -> [x86_64] -> [Ubuntu] -> [18.04] -> [runfile(local)]

在网页你能看到下面安装命令,我这里已经拷贝下来:

wget https://developer.download.nvidia.com/compute/cuda/11.3.0/local_installers/cuda_11.3.0_465.19.01_linux.run

sudo sh cuda_11.3.0_465.19.01_linux.run

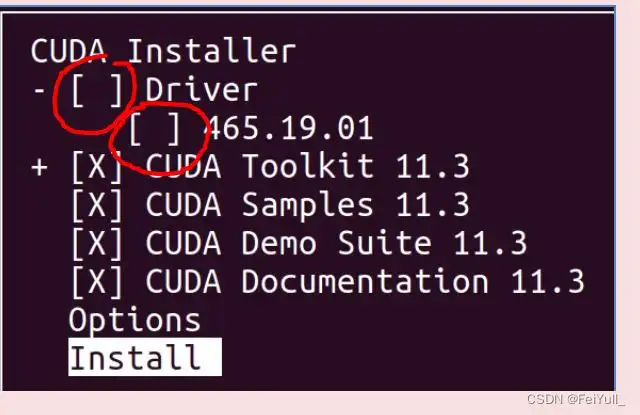

cuda的安装过程中,需要你在bash窗口手动作一些选择,这里选择如下:

select:[continue] -> [accept] -> 接着按下回车键取消Driver和465.19.01这个选项,如下图(it is important!) -> [Install]

在这里插入图片描述

bash窗口提示如下表示安装完成

#===========

#= Summary =

#===========

#Driver: Not Selected

#Toolkit: Installed in /usr/local/cuda-11.3/

#……

把cuda添加到环境变量:

vim ~/.bashrc

把下面拷贝到 .bashrc里面

# cuda v11.3

export PATH=/usr/local/cuda-11.3/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda-11.3/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

export CUDA_HOME=/usr/local/cuda-11.3

刷新环境变量和验证

source ~/.bashrc

nvcc -V

bash窗口打印如下信息表示cuda11.3安装正常

nvcc: NVIDIA (R)Cuda compiler driver<br>

Copyright(c)2005-2021 NVIDIA Corporation<br>

Built on Sun_Mar_21_19:15:46_PDT_2021<br>

Cuda compilation tools, release 11.3, V11.3.58<br>

Build cuda_11.3.r11.3/compiler.29745058_0<br>

2.2.3 安装 cudnn8.2

进入网站:

https://developer.nvidia.com/rdp/cudnn-archive选择: Download cuDNN v8.2.0 (April 23rd, 2021), for CUDA 11.x选择: cuDNN Library for Linux (x86_64)你将会下载这个压缩包: “cudnn-11.3-linux-x64-v8.2.0.53.tgz”

# 解压

tar -zxvf cudnn-11.3-linux-x64-v8.2.0.53.tgz

将cudnn的头文件和lib拷贝到cuda11.3的安装目录下:

sudo cp cuda/include/cudnn.h /usr/local/cuda/include/

sudo cp cuda/lib64/libcudnn* /usr/local/cuda/lib64/

sudo chmod a+r /usr/local/cuda/include/cudnn.h

sudo chmod a+r /usr/local/cuda/lib64/libcudnn*

2.2.4 下载 tensorrt8.4.2.4

本教程中,tensorrt只需要下载\、解压即可,不需要安装。

进入网站:

https://

developer.nvidia.cn/nvidia-tensorrt-8x-download把这个打勾: I Agree To the Terms of the NVIDIA TensorRT License Agreement选择: TensorRT 8.4 GA Update 1选择: TensorRT 8.4 GA Update 1 for Linux x86_64 and CUDA 11.0, 11.1, 11.2, 11.3, 11.4, 11.5, 11.6 and 11.7 TAR Package你将会下载这个压缩包: “TensorRT-8.4.2.4.Linux.x86_64-gnu.cuda-11.6.cudnn8.4.tar.gz”

# 解压tar -zxvf TensorRT-8.4.2.4.Linux.x86_64-gnu.cuda-11.6.cudnn8.4.tar.gz# 快速验证一下tensorrt+cuda+cudnn是否安装正常

cd TensorRT-8.4.2.4/samples/sampleMNIST

make

cd../../bin/

导出tensorrt环境变量(it is important!),注:将LD_LIBRARY_PATH:后面的路径换成你自己的!后续编译onnx模型的时候也需要执行下面第一行命令

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/home/xxx/temp/TensorRT-8.4.2.4/lib

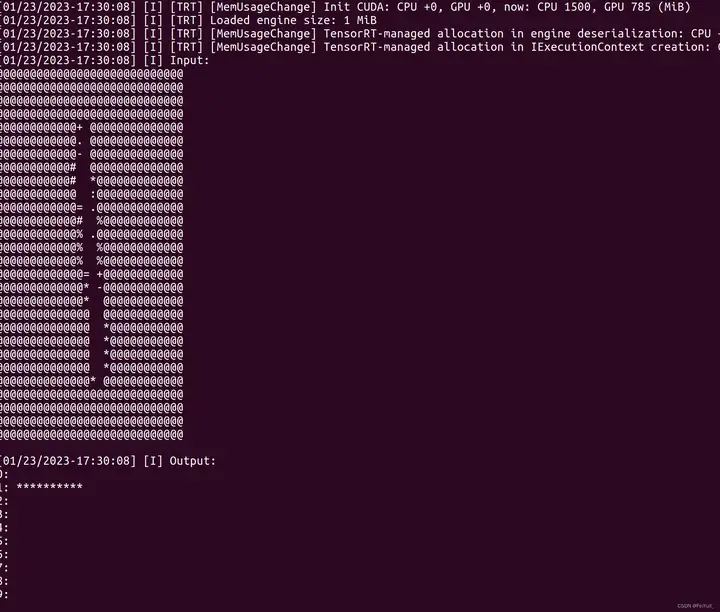

./sample_mnist

bash窗口打印类似如下图的手写数字识别表明cuda+cudnn+tensorrt安装正常

在这里插入图片描述

2.2.5 下载仓库TensorRT-Alpha并设置

git clone https://github.com/FeiYull/tensorrt-alpha

设置您自己TensorRT根目录:

git clone https://github.com/FeiYull/tensorrt-alpha

cd tensorrt-alpha/cmake

vim common.cmake

# 在文件common.cmake中的第20行中,设置成你自己的目录,别和我设置一样的路径eg:

# set(TensorRT_ROOT /root/TensorRT-8.4.2.4)

三、YOLOv8模型部署

3.1 获取onnx文件

直接在网盘下载 weiyun or google driver 或者使用如下命令导出onnx:

# yolov8 官方仓库: https://github.com/ultralytics/ultralytics

# yolov8 官方教程: https://docs.ultralytics.com/quickstart/

# TensorRT-Alpha will be updated synchronously as soon as possible!

# 安装 yolov8

conda create -n yolov8 python==3.8 -y

conda activate yolov8

pip install ultralytics==8.0.5

pip install onnx

# 下载官方权重(“.pt” file)https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt

https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8s.pt

https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8m.pt

https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8l.pt

https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x.pt

https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8x6.pt

导出 onnx:

# 640

yolo mode=export model=yolov8n.pt format=onnx dynamic=True #simplify=True

yolo mode=export model=yolov8s.pt format=onnx dynamic=True #simplify=True

yolo mode=export model=yolov8m.pt format=onnx dynamic=True #simplify=True

yolo mode=export model=yolov8l.pt format=onnxdynamic=True #simplify=True

yolo mode=export model=yolov8x.pt format=onnx dynamic=True #simplify=True

# 1280

yolo mode=export model=yolov8x6.pt format=onnx dynamic=True #simplify=True

3.2 编译 onnx

# 把你的onnx文件放到这个路径:tensorrt-alpha/data/yolov8

cd tensorrt-alpha/data/yolov8

# 请把LD_LIBRARY_PATH:换成您自己的路径。

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/TensorRT-8.4.2.4/lib

# 640

../../../../TensorRT-8.4.2.4/bin/trtexec –onnx=yolov8n.onnx –saveEngine=yolov8n.trt –buildOnly –minShapes=images:1x3x640x640 –optShapes=images:4x3x640x640 –maxShapes=images:8x3x640x640

../../../../TensorRT-8.4.2.4/bin/trtexec –onnx=yolov8s.onnx –saveEngine=yolov8s.trt –buildOnly –minShapes=images:1x3x640x640 –optShapes=images:4x3x640x640 –maxShapes=images:8x3x640x640

../../../../TensorRT-8.4.2.4/bin/trtexec –onnx=yolov8m.onnx –saveEngine=yolov8m.trt –buildOnly –minShapes=images:1x3x640x640 –optShapes=images:4x3x640x640 –maxShapes=images:8x3x640x640

../../../../TensorRT-8.4.2.4/bin/trtexec –onnx=yolov8l.onnx –saveEngine=yolov8l.trt –buildOnly –minShapes=images:1x3x640x640 –optShapes=images:4x3x640x640 –maxShapes=images:8x3x640x640

../../../../TensorRT-8.4.2.4/bin/trtexec –onnx=yolov8x.onnx –saveEngine=yolov8x.trt –buildOnly –minShapes=images:1x3x640x640 –optShapes=images:4x3x640x640 –maxShapes=images:8x3x640x640

# 1280

../../../../TensorRT-8.4.2.4/bin/trtexec –onnx=yolov8x6.onnx –saveEngine=yolov8x6.trt –buildOnly –minShapes=images:1x3x1280x1280 –optShapes=images:4x3x1280x1280 –maxShapes=images:8x3x1280x1280

你将会的到例如:yolov8n.trt、yolov8s.trt、yolov8m.trt等文件。

3.3 编译运行

git clone https://github.com/FeiYull/tensorrt-alphacd tensorrt-alpha/yolov8

mkdir build

cdbuild

cmake ..

make -j10# 注: 效果图默认保存在路径 tensorrt-alpha/yolov8/build

# 下面参数解释

# –show 表示可视化结果

# –savePath 表示保存,默认保存在build目录

# –savePath=../ 保存在上一级目录

## 640

# 推理图片

./app_yolov8 –model=../../data/yolov8/yolov8n.trt –size=640 –batch_size=1 –img=../../data/6406407.jpg –show –savePath

./app_yolov8 –model=../../data/yolov8/yolov8n.trt –size=640 –batch_size=8 –video=../../data/people.mp4 –show –savePath# 推理视频

./app_yolov8 –model=../../data/yolov8/yolov8n.trt –size=640 –batch_size=8–video=../../data/people.mp4 –show –savePath=../

# 在线推理相机视频

./app_yolov8 –model=../../data/yolov8/yolov8n.trt –size=640 –batch_size=2 –cam_id=0 –show

## 1280

# infer camera

./app_yolov8 –model=../../data/yolov8/yolov8x6.trt –size=1280 –batch_size=2 –cam_id=0 –show

四、参考

https://github.com/FeiYull/TensorRT-Alpha

本文使用

Zhihu On VSCode 创作并发布

暂无评论内容