Deployment Steps

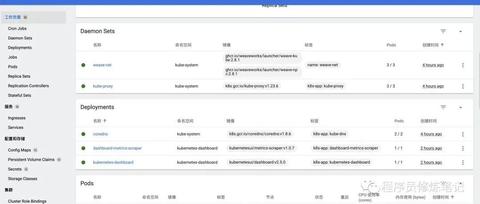

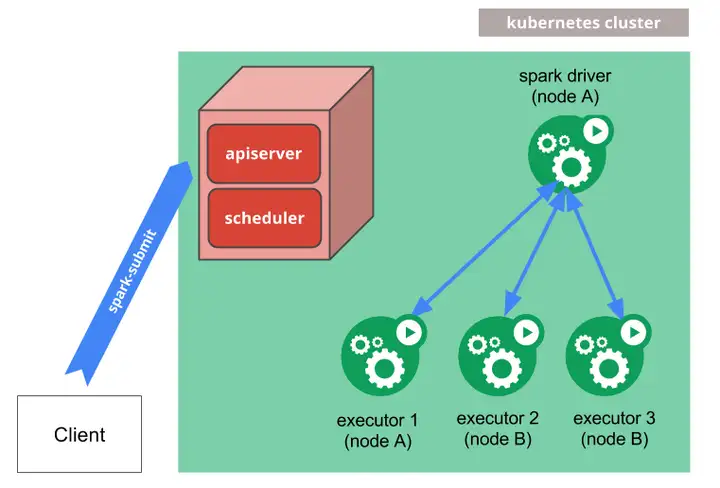

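Prerequisites: three Linux machines (I used Ubuntu virtual machines). The overall steps are:

1. Initialize the three machines
2. Install Docker and kubeadm on all three machines
3. Deploy the Master
4. Deploy the Weave network plugin
5. Deploy the two Workers
6. Deploy the Dashboard visualization plugin
7. Deploy the Rook storage plugin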

System Initialization

Disable Swap

$ swapoff -a

$ sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
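One caveat with the sed command above: it also matches swap lines that are already commented out, so running it twice produces `##`. The sketch below is a slightly more defensive variant, assuming GNU sed (for `-i` without a backup suffix). The function name `comment_out_swap` is made up for illustration, and it takes a path so it can be tried on a copy of fstab first.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab file.
# Usage: comment_out_swap [path]   (defaults to /etc/fstab; GNU sed assumed)
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Skip lines that are already comments; on the remaining lines, prefix
  # '#' to any line containing a whitespace-delimited "swap" field.
  sed -i -E '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$fstab"
}
```

Running it repeatedly leaves the file unchanged after the first call; `free -h` should report no swap once `swapoff -a` has also been run.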

Disable the Firewall

$ ufw disable

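Let iptables see bridged traffic: load the br_netfilter module (now and at every boot) and enable the bridge sysctls.

$ cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF

$ modprobe br_netfilter

$ cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

$ sysctl --system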
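Files in /etc/modules-load.d are only read at boot, so if br_netfilter is not loaded yet, `sysctl --system` reports that the bridge keys cannot be found and they stay unset. A quick check is to read the two values back. Each sysctl key maps to a file under /proc/sys with the dots replaced by slashes, which is what the sketch below relies on. The function name and the `PROC_SYS` override (there only so the check can run against a fake directory) are hypothetical.

```shell
# Hypothetical check: both bridge-netfilter sysctls must exist and equal 1.
# PROC_SYS lets the check run against a fake tree; it defaults to /proc/sys.
check_bridge_sysctl() {
  local root="${PROC_SYS:-/proc/sys}" rc=0 key file val
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    # net.bridge.x -> $root/net/bridge/x
    file="$root/$(printf '%s' "$key" | tr . /)"
    if [ ! -r "$file" ]; then
      echo "missing: $key (is br_netfilter loaded?)" >&2
      rc=1
      continue
    fi
    val=$(cat "$file")
    if [ "$val" != 1 ]; then
      echo "unexpected value: $key=$val (want 1)" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

If it complains about missing keys, run `modprobe br_netfilter` and then `sysctl --system` again.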

Add hostname resolution for all three machines:

$ cat >> /etc/hosts << EOF
172.16.56.133 k8s-master
172.16.56.134 k8s-node-01
172.16.56.135 k8s-node-02
EOF
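Appending with `cat >>` adds duplicate lines whenever the step is re-run. A small idempotent helper (hypothetical, not part of any tool) only appends an entry when the hostname is not already listed. Note also that kubeadm registers each node under its hostname by default, so each machine's hostname should match these names, e.g. `hostnamectl set-hostname k8s-master` on the master.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is
# already listed as a hostname on some non-comment line.
# Usage: add_host_entry IP NAME [file]   (file defaults to /etc/hosts)
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  if awk -v n="$name" '
      /^[[:space:]]*#/ { next }
      { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    return 0   # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, `add_host_entry 172.16.56.134 k8s-node-01` can be run any number of times on every machine.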

Install kubeadm

$ curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

$ cat <<EOF > /etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF

$ apt-get update

$ apt-get install -y docker.io kubeadm

Once these commands finish, the kubeadm, kubelet, kubectl and kubernetes-cni binaries are all installed automatically.
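Without an explicit version, apt installs the newest kubeadm in the mirror, which may not match the v1.23.6 images prepared below. Kubernetes v1.24 also removed dockershim, so a newer kubelet would no longer work with docker.io as its runtime. A sketch that pins the three packages to one version; the `-00` package revision is an assumption based on the kubernetes-xenial naming scheme, so confirm it with `apt-cache madison kubeadm` first. The function name is hypothetical.

```shell
# Hypothetical helper: print apt specs pinning kubelet/kubeadm/kubectl.
# ASSUMPTION: the mirror names package revisions "<version>-00".
kube_pkg_specs() {
  local version="${1#v}" pkg   # accept "v1.23.6" or "1.23.6"
  for pkg in kubelet kubeadm kubectl; do
    printf '%s=%s-00\n' "$pkg" "$version"
  done
}
```

Usage: `apt-get install -y docker.io $(kube_pkg_specs v1.23.6)` followed by `apt-mark hold kubelet kubeadm kubectl`, so a routine `apt-get upgrade` does not move the cluster to a different version.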

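Update the Docker Configuration

Since v1.22, kubeadm configures kubelet to use the systemd cgroup driver by default, so Docker must use the same driver:

$ cat <<EOF | sudo tee /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF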

$ systemctl restart docker
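A single curly quote, as inserted by many editors and blog platforms, is enough to make dockerd refuse to start after this change. The hypothetical helper below validates the file with Python's `json.tool` (assuming python3 is installed) and checks that the systemd cgroup driver is set.

```shell
# Hypothetical check for /etc/docker/daemon.json (or a given path).
check_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}"
  # json.tool exits non-zero on any syntax error, e.g. curly quotes.
  if ! python3 -m json.tool "$file" > /dev/null 2>&1; then
    echo "invalid JSON: $file" >&2
    return 1
  fi
  if ! grep -q '"native.cgroupdriver=systemd"' "$file"; then
    echo "cgroup driver is not set to systemd in $file" >&2
    return 1
  fi
}
```

After the restart, `docker info --format '{{.CgroupDriver}}'` should print `systemd`.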

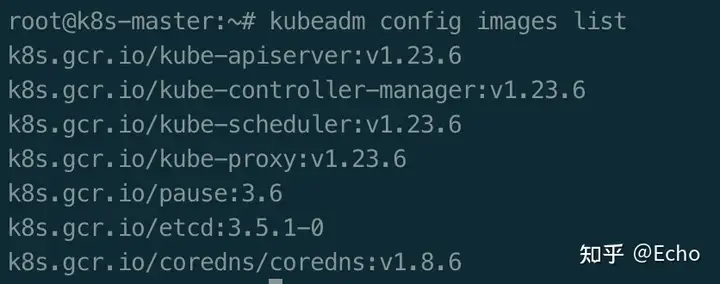

Prepare the Images in Advance

List the images kubeadm needs:

$ kubeadm config images list

#!/usr/bin/env bash
set -o errexit
set -o nounset
set -o pipefail

## Versions: adjust them to match the list printed by kubeadm config images list above
KUBE_VERSION=v1.23.6
KUBE_PAUSE_VERSION=3.6
ETCD_VERSION=3.5.1-0
DNS_VERSION=v1.8.6

## The original registry name; every image must end up tagged under it
GCR_URL=k8s.gcr.io

## The mirror repository to pull from
DOCKERHUB_URL=k8simage

## Image list; newer versions expect coredns as coredns/coredns (fixed up after the loop)
images=(
  kube-proxy:${KUBE_VERSION}
  kube-scheduler:${KUBE_VERSION}
  kube-controller-manager:${KUBE_VERSION}
  kube-apiserver:${KUBE_VERSION}
  pause:${KUBE_PAUSE_VERSION}
  etcd:${ETCD_VERSION}
  coredns:${DNS_VERSION}
)

## Pull each image from the mirror, retag it under the original name, then drop the mirror tag
for imageName in "${images[@]}"; do
  docker pull "$DOCKERHUB_URL/$imageName"
  docker tag "$DOCKERHUB_URL/$imageName" "$GCR_URL/$imageName"
  docker rmi "$DOCKERHUB_URL/$imageName"
done

docker tag "$GCR_URL/coredns:$DNS_VERSION" "$GCR_URL/coredns/coredns:$DNS_VERSION"
docker rmi "$GCR_URL/coredns:$DNS_VERSION"

Save the script above as pull_k8s_image.sh, then run it:

$ chmod +x ./pull_k8s_image.sh

$ ./pull_k8s_image.sh
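kubeadm init pulls any image it cannot find locally under exactly the expected name (for example `k8s.gcr.io/coredns/coredns:v1.8.6`, not `k8s.gcr.io/coredns:v1.8.6`), so it is worth comparing the two lists before running it. A minimal sketch; `missing_images` is a hypothetical helper that takes two files with one `repo:tag` per line.

```shell
# Hypothetical helper: print required images that are not present locally.
# $1: file listing required images (as printed by `kubeadm config images list`)
# $2: file listing local images in repo:tag form
missing_images() {
  local required="$1" present="$2"
  # -x whole-line match, -F fixed strings, -v keep lines NOT found in $present.
  grep -vxF -f "$present" "$required" || true
}
```

In bash, `missing_images <(kubeadm config images list) <(docker images --format '{{.Repository}}:{{.Tag}}')` prints nothing when every image is in place.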

Deploy the Master with kubeadm

$ kubeadm init

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

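After a successful installation, the Master also prints a command like the following:

$ kubeadm join 172.16.56.133:6443 --token b19ftk.uc7t11gdkou56kdj \
    --discovery-token-ca-cert-hash sha256:a57125da9f1180ee1ce322cf04d28503be59746135aca20c1a97ca6aaa5a86d6

This is the command Worker Nodes run to join the cluster; the token and hash in your output will be different.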
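The bootstrap token in this command expires after 24 hours by default; on the master, `kubeadm token create --print-join-command` prints a fresh join command. The `sha256:` value is the SHA-256 of the CA certificate's DER-encoded public key (its SubjectPublicKeyInfo). The sketch below wraps the openssl pipeline from the kubeadm documentation; `ca_cert_hash` is a hypothetical name, and an RSA CA key (kubeadm's default) is assumed.

```shell
# Hypothetical helper: compute the --discovery-token-ca-cert-hash value
# (without the "sha256:" prefix) from a CA certificate.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # strip the "(stdin)= " style prefix
}
```

On the master, `echo "sha256:$(ca_cert_hash)"` should reproduce the hash printed by kubeadm init.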

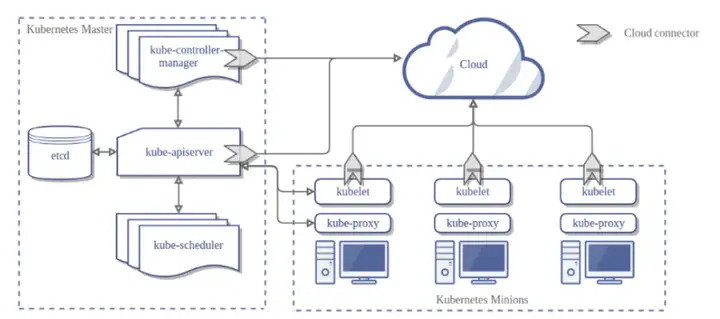

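After `kubeadm init` is run, kubeadm mainly does the following:

1. Runs a series of preflight checks to make sure this machine can run Kubernetes.
2. Generates the certificates (and their directories) that Kubernetes needs to serve requests.
3. Generates the configuration files that other components use to access kube-apiserver.
4. Generates Pod manifests for the Master components kube-apiserver, kube-controller-manager and kube-scheduler; the Pods are created automatically from these manifests.
5. Polls the health-check URL localhost:6443/healthz until the Master components are fully up.
6. Generates a bootstrap token for the cluster. Any node with kubelet and kubeadm installed that holds this token can join the cluster with kubeadm join.
7. After generating the token, saves Master information such as ca.crt to etcd in a ConfigMap named cluster-info (in the kube-public namespace).
8. Installs the default add-ons, kube-proxy and CoreDNS, which provide service discovery and load balancing for the whole cluster.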

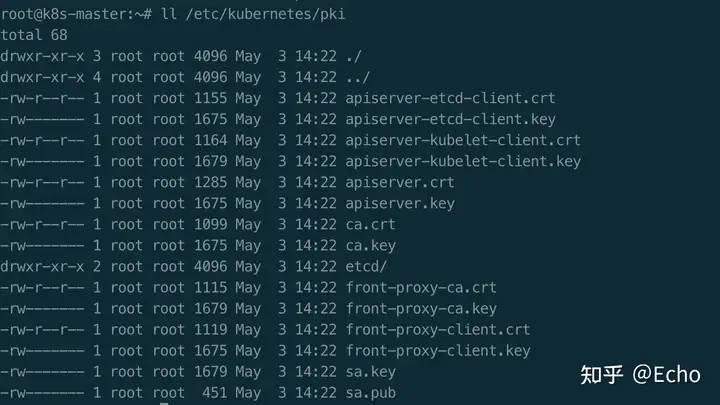

Where are the generated certificates stored?

In the /etc/kubernetes/pki directory.

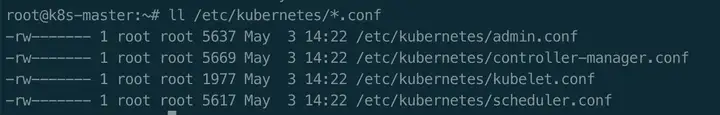

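Where are the configuration files that other components use to access kube-apiserver?

/etc/kubernetes/*.conf, i.e. admin.conf, kubelet.conf, controller-manager.conf and scheduler.conf.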

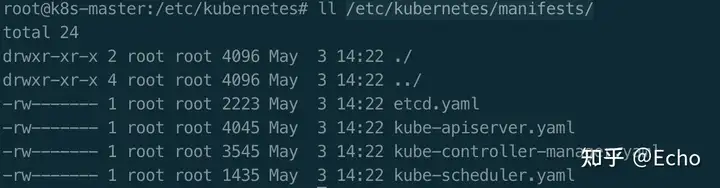

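Where are the YAML definitions of the Master components stored?

In the /etc/kubernetes/manifests/ directory.

When kubelet starts, it loads every Pod manifest in this directory and starts those Pods, and it keeps watching the directory for changes afterwards. Pods launched this way are called Static Pods.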
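The directory kubelet watches is not hard-coded: it comes from the `staticPodPath` field of the kubelet configuration, which kubeadm writes to /var/lib/kubelet/config.yaml on each node. A minimal sketch that reads it back; the function name is hypothetical, and it assumes the field sits on a single `staticPodPath: <dir>` line as kubeadm writes it.

```shell
# Hypothetical helper: print staticPodPath from a kubelet config file.
static_pod_path() {
  local cfg="${1:-/var/lib/kubelet/config.yaml}"
  # Split "key: value" lines on the colon and any spaces after it.
  awk -F': *' '$1 == "staticPodPath" { print $2; found = 1 } END { exit !found }' "$cfg"
}
```

On the master, `ls "$(static_pod_path)"` should list etcd.yaml, kube-apiserver.yaml, kube-controller-manager.yaml and kube-scheduler.yaml. kubelet also publishes a read-only mirror Pod for each of them, which is why they appear in `kubectl get pods -n kube-system` with the node name as a suffix (e.g. kube-apiserver-k8s-master).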

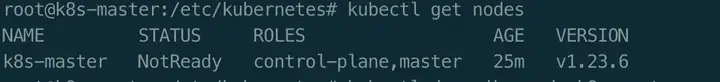

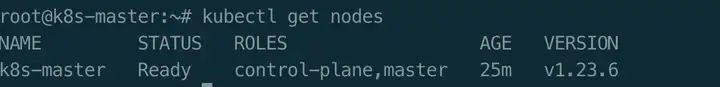

$ kubectl get nodes

$ kubectl describe node k8s-master

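The output shows that the node is NotReady because no network plugin has been deployed yet.

# Check the status of each Pod in the kube-system namespace, where the Kubernetes system components run

$ kubectl get pods -n kube-system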
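At this point the coredns Pods are expected to be stuck in Pending. With many Pods, scanning the table by eye gets tedious, so here is a small filter (a hypothetical helper) that prints the Pods that are neither Completed nor fully Running, i.e. Running with all containers ready. It assumes the default column layout of `kubectl get pods -n <namespace>`: NAME, READY, STATUS, and so on.

```shell
# Hypothetical filter: read `kubectl get pods -n NS` output on stdin and
# print the names of Pods that are not healthy yet.
unhealthy_pods() {
  awk 'NR > 1 {
    split($2, r, "/")              # READY column, e.g. "1/2"
    if ($3 == "Completed") next    # finished Job Pods are fine
    if ($3 != "Running" || r[1] != r[2]) print $1
  }'
}
```

Usage: `kubectl get pods -n kube-system | unhealthy_pods`; an empty result means everything in the namespace is up.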

Deploy the Network Plugin

$ docker pull weaveworks/weave-kube:2.8.1

$ docker tag weaveworks/weave-kube:2.8.1 ghcr.io/weaveworks/launcher/weave-kube:2.8.1

$ docker rmi weaveworks/weave-kube:2.8.1

$ docker pull weaveworks/weave-npc:2.8.1

$ docker tag weaveworks/weave-npc:2.8.1 ghcr.io/weaveworks/launcher/weave-npc:2.8.1

$ docker rmi weaveworks/weave-npc:2.8.1

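$ kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

If cloud.weave.works is unreachable (the service has since been shut down), the same v2.8.1 manifest is published as a GitHub release asset:

$ kubectl apply -f https://github.com/weaveworks/weave/releases/download/v2.8.1/weave-daemonset-k8s.yaml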
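The `k8s-version` query parameter is simply the base64 encoding of the `kubectl version` output. GNU base64 wraps its output every 76 characters, which is why the command pipes through `tr -d '\n'`: a newline inside the URL would break it. The helper below (a hypothetical name) makes that encoding step explicit; it only builds the URL and does not contact cloud.weave.works.

```shell
# Hypothetical helper: build the Weave manifest URL from version text.
weave_manifest_url() {
  local version_text="$1" encoded
  # base64 may wrap long output; strip newlines so the URL stays on one line.
  encoded=$(printf '%s' "$version_text" | base64 | tr -d '\n')
  printf 'https://cloud.weave.works/k8s/net?k8s-version=%s\n' "$encoded"
}
```

Usage: `kubectl apply -f "$(weave_manifest_url "$(kubectl version)")"`.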

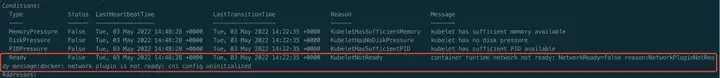

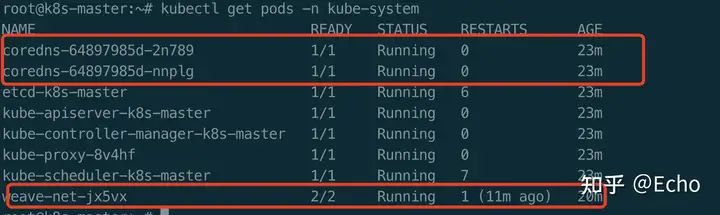

$ kubectl get pods -n kube-system

$ kubectl get nodes

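Once the network plugin is deployed, the coredns Pods switch to Running and the master node becomes Ready.

Deploy the Kubernetes Worker Nodes

Run the following on each of the two Worker nodes: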

$ docker pull k8simage/pause:3.6

$ docker tag k8simage/pause:3.6 k8s.gcr.io/pause:3.6

$ docker rmi k8simage/pause:3.6

$ docker pull k8simage/kube-proxy:v1.23.6

$ docker tag k8simage/kube-proxy:v1.23.6 k8s.gcr.io/kube-proxy:v1.23.6

$ docker rmi k8simage/kube-proxy:v1.23.6

$ docker pull weaveworks/weave-kube:2.8.1

$ docker tag weaveworks/weave-kube:2.8.1 ghcr.io/weaveworks/launcher/weave-kube:2.8.1

$ docker rmi weaveworks/weave-kube:2.8.1

$ docker pull weaveworks/weave-npc:2.8.1

$ docker tag weaveworks/weave-npc:2.8.1 ghcr.io/weaveworks/launcher/weave-npc:2.8.1

$ docker rmi weaveworks/weave-npc:2.8.1
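The pull/tag/rmi triple repeats for every image in this article. A hypothetical helper can fold each triple into one call. The `DOCKER` variable is an assumption added here for dry runs (set `DOCKER=echo` to print the commands instead of running them); it is not a Docker feature. `docker rmi` on the source name only removes that tag, because the image is still referenced by the new one.

```shell
# Hypothetical helper: pull SRC from a mirror, retag it as DST, drop SRC's tag.
# Stops at the first failing step.
retag_from_mirror() {
  local src="$1" dst="$2" docker="${DOCKER:-docker}"
  "$docker" pull "$src" &&
    "$docker" tag "$src" "$dst" &&
    "$docker" rmi "$src"
}
```

For example, `retag_from_mirror k8simage/pause:3.6 k8s.gcr.io/pause:3.6` replaces the first three commands of this step.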

$ kubeadm join 172.16.56.133:6443 --token b19ftk.uc7t11gdkou56kdj \
    --discovery-token-ca-cert-hash sha256:a57125da9f1180ee1ce322cf04d28503be59746135aca20c1a97ca6aaa5a86d6

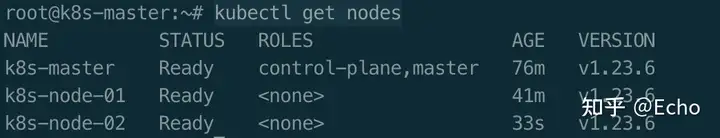

After both Worker Nodes are deployed, run the following on the Master node:

$ kubectl get nodes
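Freshly joined Workers usually show NotReady for a short while until their Weave Pods start. A small filter (a hypothetical helper) lists the nodes that are not Ready yet; it assumes the default `kubectl get nodes` layout with STATUS in the second column and treats cordoned nodes (`Ready,SchedulingDisabled`) as ready.

```shell
# Hypothetical filter: read `kubectl get nodes` output on stdin and print
# the names of nodes whose STATUS is not Ready.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" && $2 !~ /^Ready,/ { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes`. To block until everything is up instead, `kubectl wait --for=condition=Ready node --all --timeout=300s` does the waiting in one command.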

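Adjusting the Master's Pod Scheduling Policy with Taint/Toleration

By default, user Pods are not allowed to run on the master node. Kubernetes enforces this with its Taint/Toleration mechanism.

How do Taint and Toleration work?

Once a node is given a Taint (the node is "tainted"), Pods can no longer run on it, because Kubernetes Pods are "fastidious". The exception is a Pod that declares it can tolerate that taint, i.e. a Pod with a matching Toleration: only such a Pod may run on the node.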

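How do you taint a node?

$ kubectl taint nodes node1 foo=bar:NoSchedule

This adds a key-value Taint, foo=bar:NoSchedule, to node1. The NoSchedule effect means the Taint only applies when new Pods are scheduled; it does not affect Pods already running on node1, even ones without a Toleration.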
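A taint spec has the shape `key[=value]:effect`, where the value part is optional and the effect is one of NoSchedule, PreferNoSchedule or NoExecute (NoExecute, unlike NoSchedule, also evicts running Pods that lack a matching Toleration). The hypothetical parser below only illustrates that format; it is not how kubectl parses taints internally.

```shell
# Hypothetical parser: split "key[=value]:effect" into three lines.
parse_taint() {
  local spec="$1" kv key value effect
  case "$spec" in
    *:*) ;;
    *) echo "missing ':effect' in $spec" >&2; return 1 ;;
  esac
  effect="${spec##*:}"   # text after the last ':'
  kv="${spec%:*}"        # text before the last ':'
  case "$effect" in
    NoSchedule|PreferNoSchedule|NoExecute) ;;
    *) echo "unknown effect: $effect" >&2; return 1 ;;
  esac
  case "$kv" in
    *=*) key="${kv%%=*}"; value="${kv#*=}" ;;
    *)   key="$kv"; value="" ;;   # a taint may have no value
  esac
  printf 'key=%s\nvalue=%s\neffect=%s\n' "$key" "$value" "$effect"
}
```

To remove a taint, kubectl uses the same spec with a trailing minus: `kubectl taint nodes node1 foo=bar:NoSchedule-`.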

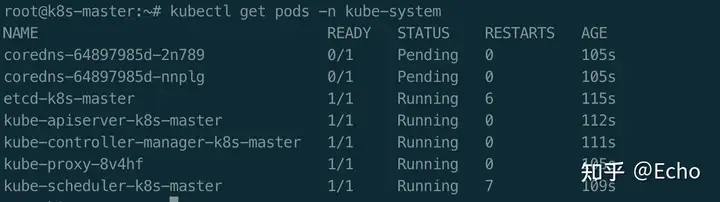

How do you view the Taints on the master node?

$ kubectl describe node k8s-master

By default, the Master node carries the taint node-role.kubernetes.io/master:NoSchedule. Its key is node-role.kubernetes.io/master, and it has no value.

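Deploy the Visualization Plugin (Dashboard)

$ kubectl create clusterrolebinding serviceaccount-cluster-admin --clusterrole=cluster-admin --user=system:serviceaccount:kubernetes-dashboard:kubernetes-dashboard

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.0/aio/deploy/recommended.yaml

The binding gives the Dashboard's ServiceAccount (kubernetes-dashboard in the kubernetes-dashboard namespace) the cluster-admin role, so the token used to log in later can see every resource. That is convenient for a lab cluster but far too permissive for production.

The apply command above installs the Dashboard directly. If raw.githubusercontent.com is unreachable from your network, deploy it with the following YAML instead, which mirrors the v2.5.0 recommended.yaml: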

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update kubernetes-dashboard-settings config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.5.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.7
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

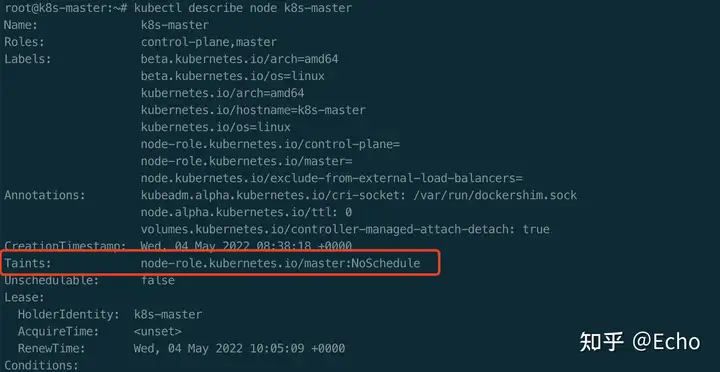


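How do you access the Dashboard?

Run the following on the master node:

$ kubectl proxy --address=0.0.0.0

Run the following on your local machine to forward local port 8001 to the proxy on the master:

$ ssh -L 8001:172.16.56.133:8001 k8s@172.16.56.133

Then open the Dashboard in a browser at:

http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/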

How do you get the token?

$ kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep kubernetes-dashboard-token | awk '{print $1}')

Copy the value of the token field from the output into the Dashboard login page.
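The one-liner above relies on the ServiceAccount token Secret that Kubernetes v1.23 still creates automatically. The sketch below isolates the name-matching step (`dashboard_token_secret` is a hypothetical name) so it can be tested against sample output; reading only the token field with jsonpath avoids picking it out of the describe output by eye.

```shell
# Hypothetical filter: read `kubectl -n kubernetes-dashboard get secret`
# output on stdin and print the first kubernetes-dashboard-token-* name.
# Exits non-zero if no such Secret is listed.
dashboard_token_secret() {
  awk '$1 ~ /^kubernetes-dashboard-token-/ { print $1; found = 1; exit }
       END { exit !found }'
}
```

Usage:
`name=$(kubectl -n kubernetes-dashboard get secret | dashboard_token_secret)` and then
`kubectl -n kubernetes-dashboard get secret "$name" -o jsonpath='{.data.token}' | base64 -d`.
On v1.24 and later these Secrets are no longer created automatically; `kubectl -n kubernetes-dashboard create token kubernetes-dashboard` issues a short-lived token instead.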

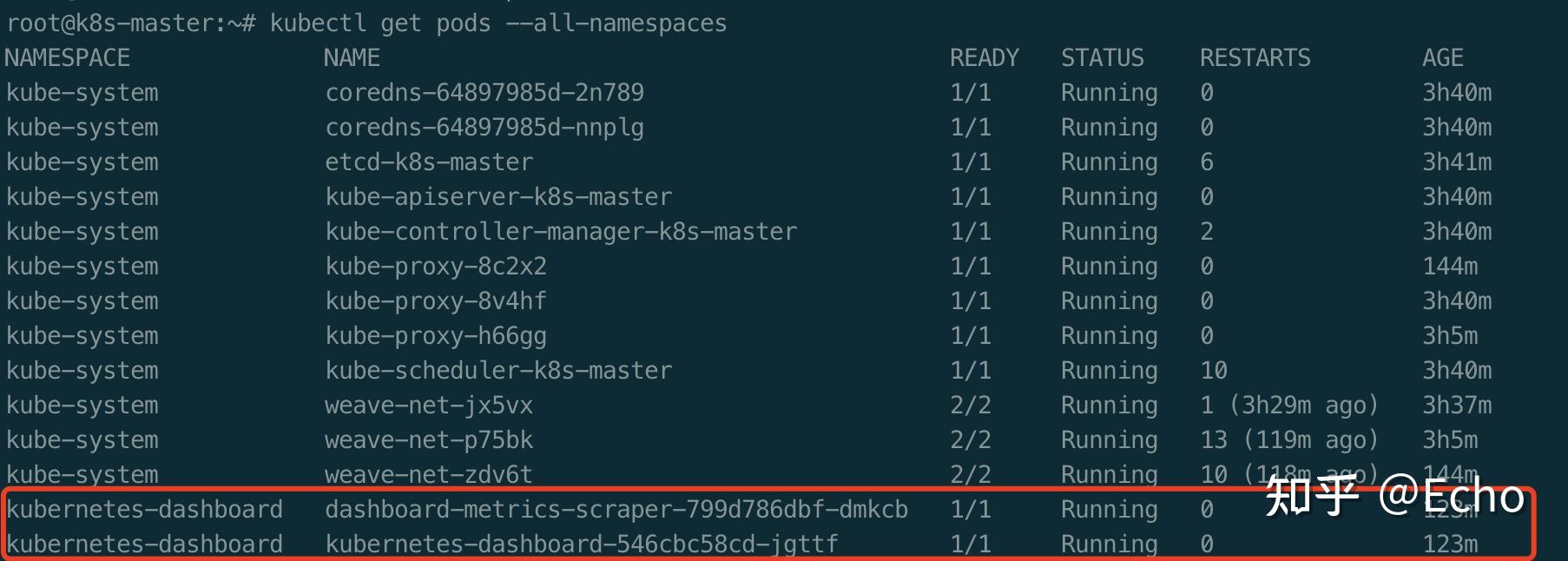

After logging in successfully, you will see the Dashboard's main page.

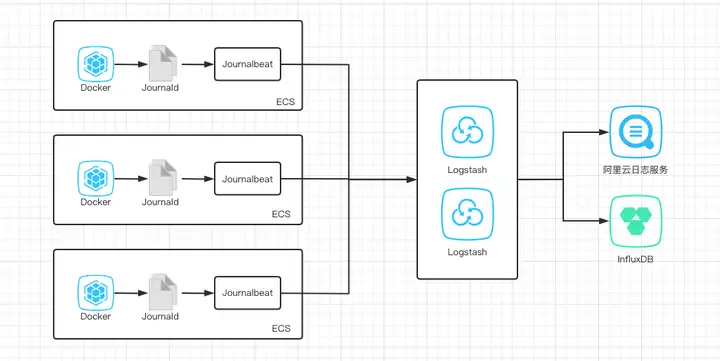

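Deploy the Storage Plugin

Persistent storage is an important way to preserve container state. A storage plugin mounts a remote volume, backed by the network or some other mechanism, into the container, so files created inside the container are actually stored on a remote storage server. No matter which host a new container starts on, it can request the specified persistent volume and access the data in it.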

$ docker pull objectscale/csi-node-driver-registrar:v2.5.0

$ docker tag objectscale/csi-node-driver-registrar:v2.5.0 k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.5.0

$ docker rmi objectscale/csi-node-driver-registrar:v2.5.0

$ docker pull objectscale/csi-provisioner:v3.1.0

$ docker tag objectscale/csi-provisioner:v3.1.0 k8s.gcr.io/sig-storage/csi-provisioner:v3.1.0

$ docker rmi objectscale/csi-provisioner:v3.1.0

$ docker pull objectscale/csi-resizer:v1.4.0

$ docker tag objectscale/csi-resizer:v1.4.0 k8s.gcr.io/sig-storage/csi-resizer:v1.4.0

$ docker rmi objectscale/csi-resizer:v1.4.0

$ docker pull longhornio/csi-attacher:v3.2.1

$ docker tag longhornio/csi-attacher:v3.2.1 k8s.gcr.io/sig-storage/csi-attacher:v3.4.0

$ docker rmi longhornio/csi-attacher:v3.2.1

$ docker pull longhornio/csi-snapshotter:v3.0.3

$ docker tag longhornio/csi-snapshotter:v3.0.3 k8s.gcr.io/sig-storage/csi-snapshotter:v5.0.1

$ docker rmi longhornio/csi-snapshotter:v3.0.3

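The commands above only prepare the images; skip them if you can reach k8s.gcr.io directly. Note that csi-attacher and csi-snapshotter are older versions (v3.2.1 and v3.0.3) retagged under the tags Rook expects (v3.4.0 and v5.0.1). This is a workaround, and the older sidecars may not behave exactly like the expected versions. Then run the following commands:

$ git clone --single-branch --branch v1.9.2 https://github.com/rook/rook.git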

$ cd rook/deploy/examples

$ kubectl apply -f crds.yaml -f common.yaml -f operator.yaml

$ kubectl apply -f cluster.yaml
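To check that the storage actually works, a PersistentVolumeClaim can be created against a Rook StorageClass. This sketch assumes the StorageClass `rook-ceph-block` has been created from `csi/rbd/storageclass.yaml` in the same examples directory (cluster.yaml alone does not create one) and that the Ceph cluster Pods in the rook-ceph namespace are up. The generator function name is hypothetical.

```shell
# Hypothetical generator: print a PVC manifest for a Rook block StorageClass.
# Usage: rbd_pvc_manifest NAME [SIZE] [STORAGECLASS]
rbd_pvc_manifest() {
  [ -n "${1:-}" ] || { echo "usage: rbd_pvc_manifest NAME [SIZE] [STORAGECLASS]" >&2; return 1; }
  local name="$1" size="${2:-1Gi}" sc="${3:-rook-ceph-block}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $name
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: $size
  storageClassName: $sc
EOF
}
```

Usage: `kubectl apply -f csi/rbd/storageclass.yaml`, then `rbd_pvc_manifest demo-pvc 1Gi | kubectl apply -f -`; `kubectl get pvc demo-pvc` should eventually report Bound.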

Once the storage plugin is deployed, the whole Kubernetes cluster is set up, and a brand-new Kubernetes cluster is ready to use.
